Storage systems

The Apocrita Research Data Storage has a capacity of over five petabytes. It uses IBM Storage Scale, a high-performance clustered file system that provides concurrent high-speed access to your data.

The storage system is connected to the QMUL Enterprise backup solution (IBM Storage Protect) to provide a high level of data resilience.

Home directories

All users have a home directory mounted under /data/home (e.g. /data/home/abc123). This is networked storage, accessible from all nodes in the cluster and is backed up nightly. This space is limited to 100GB per user. Avoid using this space as a destination for data generated by cluster jobs, as home directories fill up quickly and exceeding your quota will cause your jobs to fail.
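As a rough illustration of keeping an eye on the 100GB limit, the sketch below sums file sizes under a directory and expresses the total as a fraction of the quota. The function names are hypothetical, and treating 100GB as decimal gigabytes is an assumption. The file system's own quota accounting is authoritative and may differ from a simple sum of file sizes.

```python
import os

# 100GB per user, as stated above; decimal units are an assumption.
HOME_QUOTA_BYTES = 100 * 1000**3


def directory_usage_bytes(root):
    """Sum the sizes of regular files below root, skipping symlinks."""
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                if not os.path.islink(path):
                    total += os.path.getsize(path)
            except OSError:
                # The file vanished or is unreadable; skip it.
                continue
    return total


def quota_fraction(used_bytes, quota_bytes=HOME_QUOTA_BYTES):
    """Return usage as a fraction of the quota (1.0 means full)."""
    return used_bytes / quota_bytes
```

For example, `quota_fraction(directory_usage_bytes("/data/home/abc123"))` gives an approximate fill level for the example home directory mentioned above.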

Research Group storage space

Research Groups, Projects or Labs can have storage allocated to their group.

The first 1TB is provided free of charge. Research Groups that have multiple projects or groups can have multiple shares; however, the free 1TB is allocated only once per Research Group. Additional storage space can be purchased as necessary.

If you do not have this set up for your group, please complete our storage request form. If the share already exists and you require access to it, please email its-research-support@qmul.ac.uk and include your P.I. in the email so that we can obtain their consent.

In addition to data storage for users, Apocrita also stores some commonly used public datasets, to avoid unnecessary duplication of datasets.

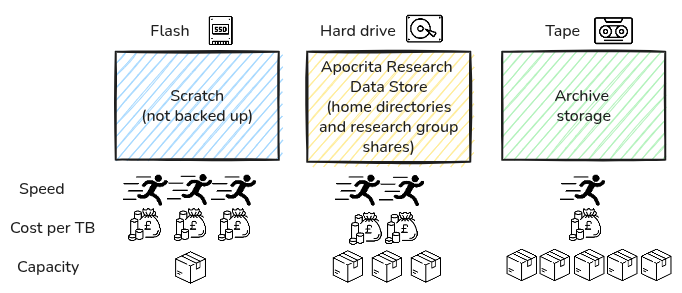

Each storage option provides different features, for varied use-cases.

Scratch

Scratch space is used for temporary storage of files, and is not backed up. We recommend you use this for working data generated by cluster jobs. Jobs are likely to run faster because of the higher performance of this system. All users have a 3TB quota in scratch by default; however, files are automatically deleted 65 days after their last modification.
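Because deletion is based on the last modification time, it can help to list files that are close to the 65-day limit before they disappear. The following is a minimal sketch under stated assumptions: the function names and the seven-day warning window are hypothetical, and the scratch path is not given here, so you pass in your own directory.

```python
import os
import time

# From the text: files are deleted 65 days after their last modification.
SCRATCH_RETENTION_DAYS = 65


def days_until_deletion(mtime, now=None, retention_days=SCRATCH_RETENTION_DAYS):
    """Days left before a file modified at mtime (epoch seconds) hits the limit."""
    now = time.time() if now is None else now
    age_days = (now - mtime) / 86400
    return retention_days - age_days


def files_at_risk(root, warn_days=7, now=None):
    """Yield (path, days_left) for files under root with at most warn_days left."""
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                mtime = os.lstat(path).st_mtime
            except OSError:
                # The file vanished or is unreadable; skip it.
                continue
            left = days_until_deletion(mtime, now=now)
            if left <= warn_days:
                yield path, left
```

Anything this reports that still needs to be kept should be moved to backed-up storage, since scratch is not backed up.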

Archive Storage

Research groups often have large amounts of infrequently accessed data that still needs to be kept. The Archive Storage is a low cost, high capacity tape-based archive system that allows data owners to deposit and recall data from the archive themselves.

Typical users of the archive would be those who have more than 5TB of data that is typically not accessed for months at a time. The storage costs £15 per TB per year. Data needs preparation before it is stored in the archive.

Local disk space on nodes - $TMPDIR

We typically recommend using the scratch system where possible; however, some edge cases perform badly on anything except local storage. Temporary space is available on the nodes for use when you submit a job to the cluster. The size of this storage for each node type is listed on the individual node types page.

As this storage is physically located on the nodes, it cannot be shared between them, but for certain I/O intensive workloads, it might provide better performance than networked storage. Examples are on the Using $TMPDIR page.
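A common pattern for node-local space is to stage input onto the local disk, do the I/O-heavy work there, and copy the results back to networked storage before the job ends. The sketch below illustrates that pattern only; it is not taken from the Using $TMPDIR page. It assumes the `TMPDIR` environment variable points at the node-local space inside a job, and `run_with_local_staging` and its `process` callback are hypothetical names.

```python
import os
import shutil
import tempfile
from pathlib import Path


def run_with_local_staging(input_path, output_dir, process):
    """Stage input locally, run process(local_in, local_out), copy results back."""
    # Assumption: $TMPDIR is set inside a job; otherwise use the system temp dir.
    local_root = Path(os.environ.get("TMPDIR", tempfile.gettempdir()))
    work = Path(tempfile.mkdtemp(prefix="staging_", dir=local_root))
    try:
        # Copy the input from networked storage to the local disk.
        local_in = work / Path(input_path).name
        shutil.copy2(input_path, local_in)

        # Let the caller do its I/O-heavy work entirely on local disk.
        local_out = work / "out"
        local_out.mkdir()
        process(local_in, local_out)

        # Copy everything the caller produced back to networked storage.
        output_dir = Path(output_dir)
        output_dir.mkdir(parents=True, exist_ok=True)
        for item in local_out.iterdir():
            target = output_dir / item.name
            if item.is_dir():
                shutil.copytree(item, target, dirs_exist_ok=True)
            else:
                shutil.copy2(item, target)
    finally:
        # Local space is not shared between nodes, so clean up before leaving.
        shutil.rmtree(work, ignore_errors=True)
```

Whether this is faster than working directly on scratch depends on the workload, so it is worth timing both approaches on a small test case.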

Additional storage

Additional backed-up storage is available for purchase at £75 per 1TB per annum.

This can be arranged by emailing its-research-support@qmul.ac.uk with an Agresso budget code.

Additional storage is allocated to Group shares rather than personal home directories or scratch space.
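To get a feel for the prices quoted on this page, the arithmetic can be sketched as follows. This is an illustration only: it assumes simple linear pricing with no minimum purchase, and that the free 1TB is deducted once from a Research Group's total. The function names are hypothetical, and actual charges are whatever the service confirms.

```python
FREE_GROUP_TB = 1                 # first 1TB per Research Group is free
ADDITIONAL_GBP_PER_TB_YEAR = 75   # additional backed-up storage
ARCHIVE_GBP_PER_TB_YEAR = 15      # tape-based archive storage


def group_storage_cost(total_tb):
    """Estimated annual cost in GBP for total_tb of group storage (assumes linear pricing)."""
    billable_tb = max(0, total_tb - FREE_GROUP_TB)
    return billable_tb * ADDITIONAL_GBP_PER_TB_YEAR


def archive_cost(total_tb):
    """Estimated annual cost in GBP for total_tb held in the archive (assumes linear pricing)."""
    return total_tb * ARCHIVE_GBP_PER_TB_YEAR
```

Under these assumptions, a group holding 10TB of backed-up storage would pay for 9TB, which is 9 × £75 = £675 per year, while 10TB in the archive would cost 10 × £15 = £150 per year.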