Array jobs¶

A common requirement is to be able to run the same job a large number of times, with different input parameters. Whilst this could be done by submitting lots of individual jobs, a more efficient and robust way is to use an array job. Using an array job also allows you to circumvent the maximum jobs per user limitation, and manage the submission process more elegantly.

Arrays can be thought of as a for loop:

for NUM in 1 2 3

do

echo $NUM

done

This is equivalent to the following job script:

#!/bin/bash

#$ -cwd

#$ -pe smp 1

#$ -l h_vmem=1G

#$ -j y

#$ -l h_rt=1:0:0

#$ -t 1-3

echo ${SGE_TASK_ID}

Here the -t flag sets the number of iterations in your qsub script, and the counter (the equivalent of $NUM in the for loop example) is $SGE_TASK_ID.

To run an array job, use the -t option to specify the range of tasks to run. When the job runs, the script is executed once for each value specified by -t, with $SGE_TASK_ID set to that value. The values for -t can be any integer range, optionally with a step size. In the following example, -t 20-30:5 produces the task IDs 20, 25 and 30, and so runs 3 tasks.

#!/bin/bash

#$ -cwd

#$ -pe smp 1

#$ -l h_vmem=1G

#$ -j y

#$ -l h_rt=1:0:0

#$ -t 20-30:5

echo "Sleeping for ${SGE_TASK_ID} seconds"

sleep ${SGE_TASK_ID}
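The task IDs that a stepped range generates can be previewed on the command line before submitting. The following is a minimal sketch that assumes the standard seq utility is available; seq is not part of the scheduler, it simply takes the same first, step and last values as -t 20-30:5.

```shell
#!/bin/bash
# Preview the task IDs that '-t 20-30:5' would generate.
# seq takes FIRST INCREMENT LAST, mirroring the first-last:step form of -t.
# xargs with no command joins the numbers onto a single line.
TASK_IDS=$(seq 20 5 30 | xargs)
echo "Task IDs: ${TASK_IDS}"
```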

The only difference between the individual tasks is the value of the $SGE_TASK_ID environment variable. This value can be used within the job to select a different parameter set, input file or directory for each task.
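One way to turn the task ID into a parameter is simple arithmetic. The sketch below is illustrative only: the workload (1000 records processed in chunks of 100) and the variable names CHUNK_SIZE, FIRST_RECORD and LAST_RECORD are hypothetical, and the fallback value for SGE_TASK_ID is an assumption added so the script can be tried outside the scheduler.

```shell
#!/bin/bash
#$ -cwd
#$ -pe smp 1
#$ -l h_vmem=1G
#$ -j y
#$ -l h_rt=1:0:0
#$ -t 1-10

# Fallback so the sketch also runs outside the scheduler
# (assumption: pretend to be task 2 when SGE_TASK_ID is not set).
SGE_TASK_ID="${SGE_TASK_ID:-2}"

# Hypothetical workload: 1000 records split into chunks of 100 per task.
CHUNK_SIZE=100
FIRST_RECORD=$(( (SGE_TASK_ID - 1) * CHUNK_SIZE + 1 ))
LAST_RECORD=$(( SGE_TASK_ID * CHUNK_SIZE ))

echo "Task ${SGE_TASK_ID} handles records ${FIRST_RECORD}-${LAST_RECORD}"
```

The computed bounds would then be passed to your own program in place of the echo line.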

Output files for array jobs include the task ID to distinguish the output of each task, for example:

testarray.o123.1

testarray.o123.2

testarray.o123.3

Running a single task from an array job

If you need to run a single task from an array job (for example, the 5th task of 100 hit the execution time limit and you wish to run it again), you can pass the task number with the -t option when submitting: qsub -t 5 array_job.sh

Email notifications and large arrays

Please ensure that job email notifications are not enabled in job scripts for arrays with lots of tasks, as sending a large number of email messages causes problems for the receiving mail servers, and can even disrupt service.

Processing files¶

If you need to process lots of files, you can build a list of them using ls -1. For example, if your files are all named EN<something>.txt:

ls -1 EN*.txt > list_of_files.txt

Now find out how many files there are:

$ wc -l list_of_files.txt

35 list_of_files.txt

Then set the -t value to the appropriate number:

#$ -t 1-35
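Rather than copying the count by hand, the range can be derived from the list at submission time. This is a sketch under stated assumptions: the three EN_*.txt files and the script name array_job.sh are hypothetical, the scratch directory exists only so the example runs anywhere, and the qsub command is printed rather than executed.

```shell
#!/bin/bash
# Demo setup so the sketch runs anywhere: three hypothetical input files
# in a scratch directory.
cd "$(mktemp -d)" || exit 1
touch EN_a.txt EN_b.txt EN_c.txt

ls -1 EN*.txt > list_of_files.txt

# Redirecting the file into wc prints only the count, without the file name.
# The arithmetic expansion strips any padding some wc versions add.
NUM_FILES=$(( $(wc -l < list_of_files.txt) ))

# Print the submission command; drop 'echo' to actually submit.
# array_job.sh is a hypothetical script name.
echo qsub -t "1-${NUM_FILES}" array_job.sh
```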

You can then use sed to select the correct line of the file for each iteration:

INPUT_FILE=$(sed -n "${SGE_TASK_ID}p" list_of_files.txt)

This results in the final script:

#!/bin/bash

#$ -cwd

#$ -pe smp 1

#$ -l h_vmem=1G

#$ -j y

#$ -l h_rt=1:0:0

#$ -t 1-35

INPUT_FILE=$(sed -n "${SGE_TASK_ID}p" list_of_files.txt)

example-program < "$INPUT_FILE"
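If the -t range is larger than the list, or a listed file has been moved, sed returns an empty or stale name. The sketch below shows one possible guard; the demo file names, the STATUS variable and the fallback task ID are assumptions made for illustration, not part of the original script.

```shell
#!/bin/bash
# Fallback so the sketch runs outside the scheduler (assumption).
SGE_TASK_ID="${SGE_TASK_ID:-2}"

# Demo setup: a hypothetical list with one existing file and one missing file.
cd "$(mktemp -d)" || exit 1
touch EN_a.txt
printf '%s\n' EN_a.txt EN_missing.txt > list_of_files.txt

INPUT_FILE=$(sed -n "${SGE_TASK_ID}p" list_of_files.txt)

# Check the selected line before handing it to the program.
if [ -z "$INPUT_FILE" ]; then
    STATUS="no line ${SGE_TASK_ID} in list_of_files.txt"
elif [ ! -r "$INPUT_FILE" ]; then
    STATUS="cannot read ${INPUT_FILE}"
else
    STATUS="ok"
fi
echo "Task ${SGE_TASK_ID}: ${STATUS}"
```

In a real job script the two failing branches would typically print the message and exit with a non-zero status, and the final branch would run the program on the selected file.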

Processing directories¶

Consider processing the contents of a collection of 1000 directories, called test1 to test1000.

#!/bin/bash

#$ -cwd

#$ -pe smp 1

#$ -l h_vmem=1G

#$ -j y

#$ -l h_rt=1:0:0

#$ -t 1-1000

cd test${SGE_TASK_ID}

./program < input

Tasks are started in order of the array index.
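Directory names do not always match the bare task ID. As a hedged illustration, suppose the directories were instead zero-padded (test0001 to test1000, a hypothetical layout): printf can build the name from the task ID. The fallback task ID is an assumption so the sketch runs outside the scheduler.

```shell
#!/bin/bash
# Fallback for running outside the scheduler (assumption).
SGE_TASK_ID="${SGE_TASK_ID:-7}"

# Hypothetical layout: directories named test0001 ... test1000.
TASK_DIR=$(printf 'test%04d' "$SGE_TASK_ID")
echo "Task ${SGE_TASK_ID} would run in ${TASK_DIR}"

# In the job script itself, stop the task if the directory is missing:
# cd "$TASK_DIR" || exit 1
# ./program < input
```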

Passing arguments to an application¶

The following example runs an application with differing arguments obtained from a text file:

$ cat list_of_args.txt

-i 50 52 54 -s 10

-i 60 62 64 -s 20

-i 70 72 74 -s 30

#!/bin/bash

#$ -cwd

#$ -pe smp 1

#$ -l h_vmem=1G

#$ -j y

#$ -l h_rt=1:0:0

#$ -t 1-3

INPUT_ARGS=$(sed -n "${SGE_TASK_ID}p" list_of_args.txt)

./program $INPUT_ARGS

This results in 3 tasks being submitted, each using a different set of input arguments taken from the corresponding line of the text file.
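The script above relies on the shell splitting the unquoted $INPUT_ARGS into separate words. A more explicit alternative, sketched here as one option rather than the required method, reads the line into a bash array. The scratch directory, the ARGS name and the fallback task ID are assumptions, and echo stands in for ./program.

```shell
#!/bin/bash
# Fallback for running outside the scheduler (assumption).
SGE_TASK_ID="${SGE_TASK_ID:-2}"

# Demo setup: recreate list_of_args.txt in a scratch directory.
cd "$(mktemp -d)" || exit 1
cat > list_of_args.txt <<'EOF'
-i 50 52 54 -s 10
-i 60 62 64 -s 20
-i 70 72 74 -s 30
EOF

INPUT_ARGS=$(sed -n "${SGE_TASK_ID}p" list_of_args.txt)

# Split the line on whitespace into an array, one element per argument.
read -r -a ARGS <<< "$INPUT_ARGS"

# 'echo' stands in for ./program here.
echo "${#ARGS[@]} arguments:" "${ARGS[@]}"
```

Expanding "${ARGS[@]}" passes each argument as its own word without also expanding wildcard characters that may appear in the file.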

Task concurrency¶

Task concurrency (-tc N) is the number of array tasks allowed to run at the same time. It can be used to limit the number of running tasks for larger jobs, and for jobs that may impact storage performance.

If your code may read or write the same files on the filesystem, you may need to use this option to avoid filesystem blocking. Also, large numbers of jobs starting or finishing at the same moment put extra load on the scheduler; using the tc throttle can limit this.

#!/bin/bash

#$ -cwd # Run the code from the current directory

#$ -pe smp 1

#$ -l h_vmem=1G

#$ -j y # Merge the standard output and standard error

#$ -l h_rt=1:0:0 # Limit each task to 1 hr

#$ -t 1-1000

#$ -tc 5

cd test${SGE_TASK_ID}

./program < input

You can alter the tc value while the job is running with qalter. For example, to change the concurrency of an array job to a value of ten:

qalter -tc 10 <jobid>

Writing job output to an alternative location¶

Each array task will write its own output and error job files (or a single output file per task if #$ -j y is used). If the #$ -cwd parameter is also used, expect to see many job output files in the same directory as your submission script. This may not be desirable, especially if the array job is large.

One method to avoid this is to change the location of the job output files. To achieve this, add the #$ -o LOCATION parameter (and #$ -e LOCATION if not using #$ -j y) to your job script, where LOCATION is an absolute or relative path to a directory that exists before the job is submitted.

For example, to redirect the job output files to a directory called logs in the same directory as the submission script, first create the logs directory, then change your submission script to include the following:

#$ -o logs/

#$ -e logs/

If running several similar array jobs, consider creating a new sub-directory inside logs for each array job, and redirecting the output to that directory instead of the main logs directory.
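A possible way to set up a per-job sub-directory is sketched below. The naming scheme (myanalysis plus the date) and the script name array_job.sh are hypothetical, and the qsub command is printed rather than executed. Passing -o and -e on the qsub command line is used here instead of editing the #$ lines in the script.

```shell
#!/bin/bash
# Hypothetical naming scheme: one sub-directory of logs per array job,
# named after the analysis and the submission date.
LOG_DIR="logs/myanalysis_$(date +%Y%m%d)"

# The directory must exist before the job is submitted.
mkdir -p "$LOG_DIR"

# Print the submission command; drop 'echo' to actually submit.
# array_job.sh is a hypothetical script name.
echo qsub -o "${LOG_DIR}/" -e "${LOG_DIR}/" array_job.sh
```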

Holding specific tasks from a queued array job¶

The qhold command (see man qhold) temporarily places a hold on queued jobs to stop them from starting. A job that is already running when a hold is applied will continue to run and will not be halted. This can be useful if you notice that a lot of your jobs are failing: holding the remaining queued tasks of the same array job prevents further failures.

For example, to hold tasks 20-60 of job 3388 so that they do not start, run:

qhold 3388.20-60

After applying a hold, these tasks will not run until released with qrls (see man qrls). Held jobs and tasks are displayed in the hqw state when running qstat.
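Releasing the held tasks mirrors the qhold example. The sketch below assumes qrls accepts the same job.task-range form as qhold (check man qrls on your system) and only prints the command, so it is safe to try anywhere; the variable names are illustrative.

```shell
#!/bin/bash
JOB_ID=3388          # job ID from the qhold example
TASK_RANGE=20-60     # tasks that were placed on hold

# Build the release command using the same job.task-range form as qhold.
RELEASE_CMD="qrls ${JOB_ID}.${TASK_RANGE}"

# Print the command; run it by hand on the cluster once you have checked it.
echo "$RELEASE_CMD"
```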

Deleting specific tasks from a queued array job¶

To delete certain tasks from an array, use the -t option for qdel.

For example, to delete tasks 20-60 of job 3388, run:

qdel 3388 -t 20-60

This will delete the tasks regardless of whether they are running, queued or held.

Re-submitting specific tasks from an array job¶

If specific tasks in your array job did not complete successfully, or were deleted before they could run, you may want to re-submit those tasks. Rather than re-submitting the entire array, you can re-submit only the affected tasks after correcting the original issue.

For example, to re-submit tasks 2-5, 17 and 35-36 from the original 60-task array, either modify the -t parameter in your job script for each task range and re-submit, or override the -t parameter on the qsub line, as shown below:

qsub -t 2-5 example.sh

qsub -t 17 example.sh

qsub -t 35-36 example.sh

The task ID range given to the -t option may be a single number or a single range, so a separate submission is needed for each range.
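When there are many ranges to re-submit, a short loop avoids typing each qsub line. This is a sketch only: it prints the commands instead of running them, and the RANGES and COMMANDS variable names are illustrative.

```shell
#!/bin/bash
# Task ranges to re-submit, one qsub call per range.
RANGES="2-5 17 35-36"

# Build one submission command per range.
# Drop 'echo' inside the loop to actually submit.
COMMANDS=$(for RANGE in $RANGES; do
    echo qsub -t "$RANGE" example.sh
done)

printf '%s\n' "$COMMANDS"
```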

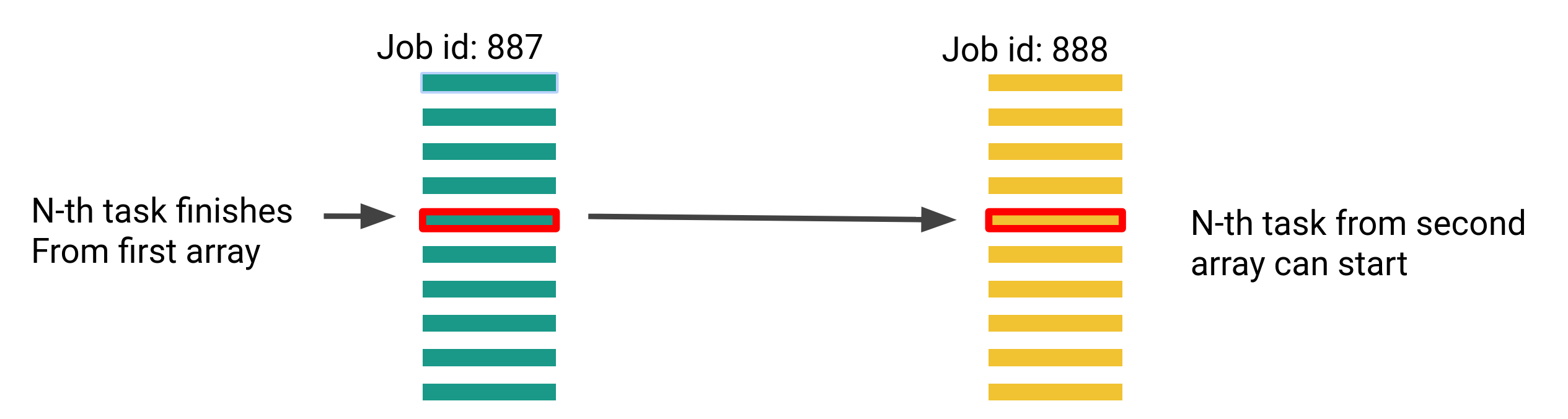

Queuing dependent array tasks¶

If you have two array jobs of the same size and your workflow contains a dependency where task N from array 2 must run after task N from array 1, you can use the -hold_jid_ad parameter to achieve this.

An example use case is where the second array processes the results of the first array, and you do not want to wait for the entire first array to complete before the second array starts. This is particularly useful if your array jobs are large.

The following demonstrates an array task dependency for two jobs (887 and 888) each with 10,000 tasks, and a task concurrency of 5:

qsub -t 1-10000 -tc 5 array1.sh

qsub -t 1-10000 -tc 5 -hold_jid_ad 887 array2.sh

We should expect to see tasks 1-5 from array 887 running initially. When one of those 5 tasks completes, the corresponding task from array 888 will start running. The next task in 887 will also start running, resources permitting.

The corresponding qstat output is shown below (some fields removed for brevity):

job-ID  prior     name    state  queue       slots  ja-task-ID
----------------------------------------------------------------------
887     15.00000  array1  r      all.q@NODE  1      1
887     15.00000  array1  r      all.q@NODE  1      2
887     15.00000  array1  r      all.q@NODE  1      4
887     15.00000  array1  r      all.q@NODE  1      5
887     15.00000  array1  r      all.q@NODE  1      6
888     15.00000  array2  r      all.q@NODE  1      3
887     0.00000   array1  qw                 1      7-10000
888     0.00000   array2  hqw                1      1,2
888     0.00000   array2  hqw                1      4-10000
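In a script, the ID of the first array has to be captured before it can be passed to -hold_jid_ad. The sketch below assumes qsub is run with the -terse option and that, for an array job, it prints the job ID followed by the task range (for example 887.1-10000:1); verify this against man qsub on your system. A sample string is used in place of a real submission so the example runs anywhere, and the second qsub command is only printed.

```shell
#!/bin/bash
# Sample of what 'qsub -terse -t 1-10000 -tc 5 array1.sh' is assumed to
# print for an array job: the job ID followed by the task range.
TERSE_OUTPUT="887.1-10000:1"

# Keep only the part before the first dot, i.e. the job ID.
JOB1_ID="${TERSE_OUTPUT%%.*}"

# Print the dependent submission; drop 'echo' to actually submit.
echo qsub -t 1-10000 -tc 5 -hold_jid_ad "$JOB1_ID" array2.sh
```

On a cluster, TERSE_OUTPUT would instead be set from the command substitution of the first qsub call.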

Need help?¶

If you need help writing or using array job submission scripts, please see Getting Help.