Using DeepLabCut via OnDemand

DeepLabCut is a toolbox for markerless pose estimation of animals performing various tasks. Running DeepLabCut on Apocrita via OnDemand gives you access to its graphical interface. Please refer to the overview section for instructions on how to log in to OnDemand.

Pre-trained models

DeepLabCut will automatically download any required pre-trained models to $HOME/.config/deeplabcut/<version>. Whilst these shouldn't take up a lot of space, it might be wise to monitor this directory in case it fills up.
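One way to keep an eye on the model directory is a short script like the sketch below. It sums the sizes of all files under `$HOME/.config/deeplabcut`, the parent of the per-version directories mentioned above. The helper name `directory_size_bytes` is hypothetical and chosen only for illustration.

```python
from pathlib import Path


def directory_size_bytes(root):
    """Return the total size in bytes of all regular files below root."""
    root = Path(root)
    if not root.is_dir():
        return 0  # nothing has been downloaded yet
    return sum(
        p.stat().st_size
        for p in root.rglob("*")
        if p.is_file() and not p.is_symlink()  # skip links to avoid double counting
    )


# Parent of the per-version model directories described in the text.
model_cache = Path.home() / ".config" / "deeplabcut"
size_mib = directory_size_bytes(model_cache) / 1024**2
print(f"{model_cache}: {size_mib:.1f} MiB")
```

The script can be run from any Python session on Apocrita; it reports 0.0 MiB if no models have been downloaded yet.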

Starting a DeepLabCut session

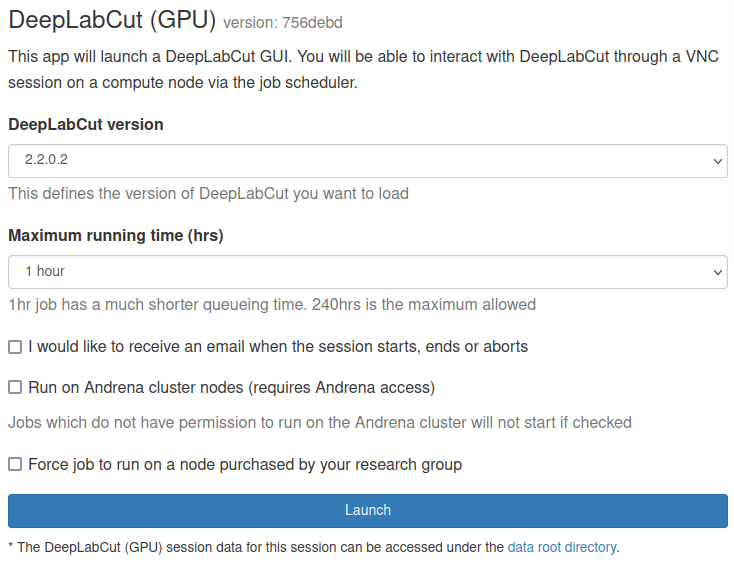

Select DeepLabCut (GPU) from the GUIs list of the Interactive Apps drop-down menu, or from the My Interactive Sessions page. Before using DeepLabCut, researchers need to request access to the GPU nodes, or have access to the DERI/Andrena GPU nodes.

Choose the resources your job will need. A maximum running time of 1 hour is the best option for getting a session quickly, unless you have access to owned nodes, which may offer sessions immediately for up to 24 hours if resources are available.

DeepLabCut only uses one GPU

DeepLabCut only uses one GPU; therefore, the form options "number of cores" and "number of GPUs" have been removed from the OnDemand application. All DeepLabCut OnDemand jobs will be submitted with 1 GPU.

After you click Launch, the request is queued. When resources have been allocated, you can connect to the session by clicking the blue Launch DeepLabCut (GPU) button.

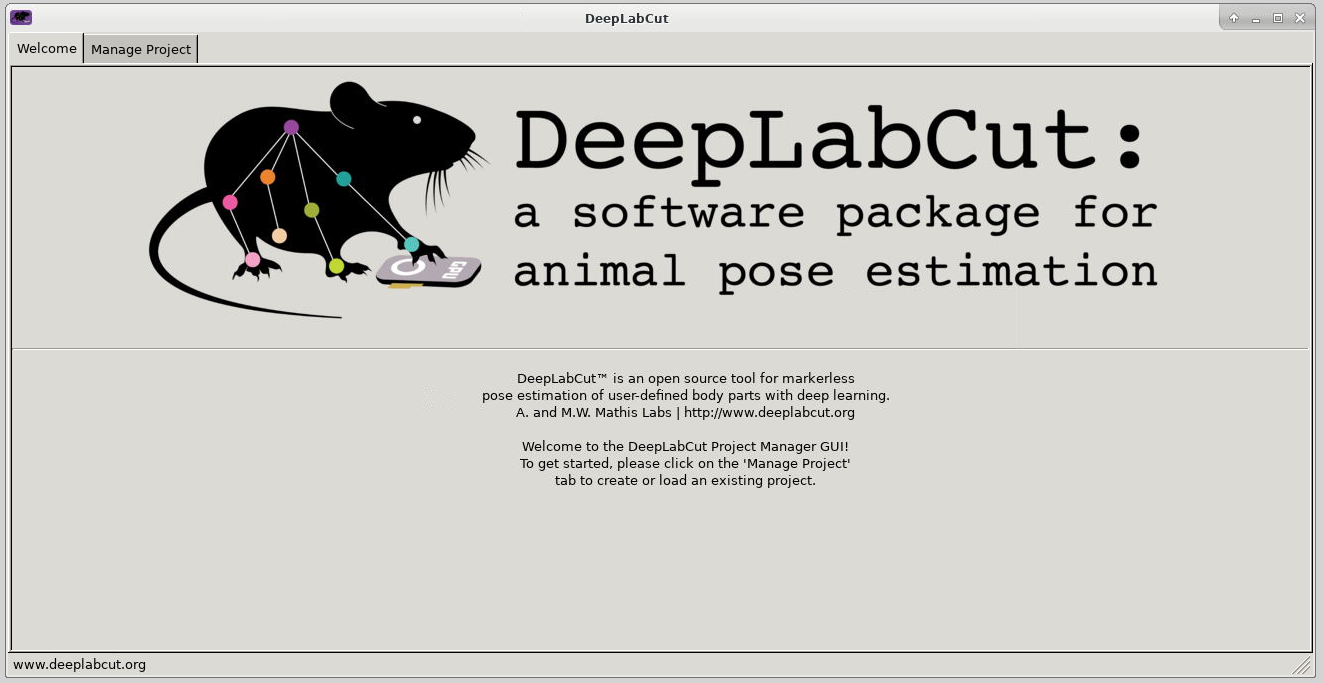

Once connected, you are presented with the familiar DeepLabCut interface, and you can use the allocated resources and access your research data stored on Apocrita.

GUI initialisation time

Due to the large number of dependencies being loaded to present the DeepLabCut GUI, the application may not be immediately displayed. Please allow up to 5 minutes for the GUI to be loaded before contacting us for assistance.

Exiting the session

If a session exceeds the requested running time, it will be killed. You can finish your session using one of the following methods:

- clicking [x] in the upper right corner of the DeepLabCut application

- clicking the red Delete button for the relevant session on the My Interactive Sessions page.

After a session ends, the resources will be returned to the cluster queues.

Using DeepLabCut inside Jupyter Notebook

DeepLabCut Jupyter Notebook is a standalone app

Do not use the Jupyter Notebook (GPU) OnDemand application with DeepLabCut. The required DeepLabCut Conda environment will not be available.

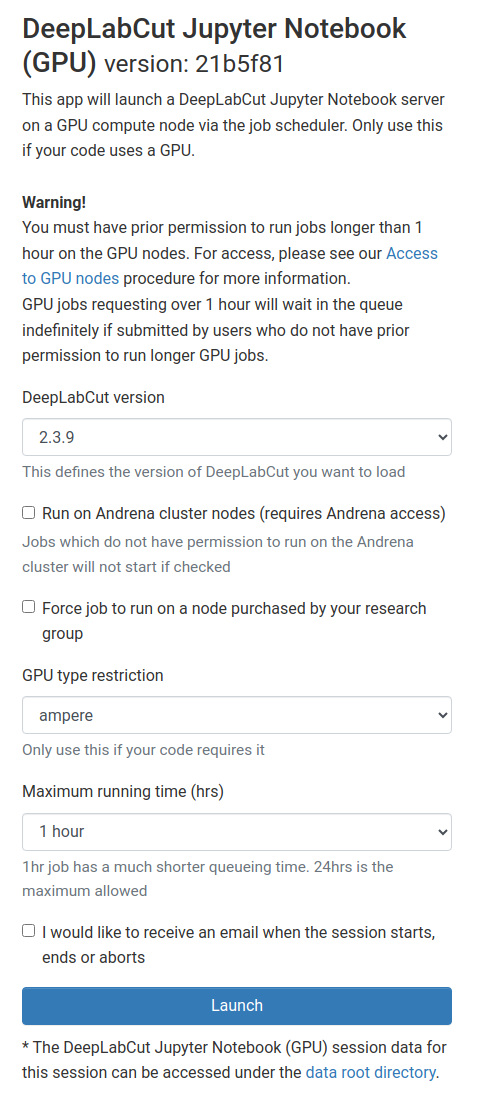

Select DeepLabCut Jupyter Notebook (GPU) from the GUIs list of the Interactive Apps drop-down menu, or from the My Interactive Sessions page. Before using DeepLabCut, researchers need to request access to the GPU nodes, or have access to the DERI/Andrena GPU nodes.

Choose the resources your job will need. A maximum running time of 1 hour is the best option for getting a session quickly, unless you have access to owned nodes, which may offer sessions immediately for up to 24 hours if resources are available.

DeepLabCut only uses one GPU

DeepLabCut only uses one GPU; therefore, the form options "number of cores" and "number of GPUs" have been removed from the OnDemand application. All DeepLabCut OnDemand jobs will be submitted with 1 GPU.

After you click Launch, the request is queued. When resources have been allocated, you can connect to the session by clicking the blue Launch DeepLabCut Jupyter Notebook (GPU) button.

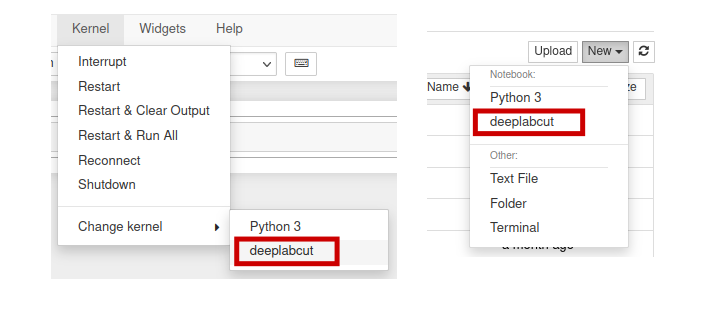

Once in Jupyter Notebook, you will notice that the DEEPLABCUT conda environment is available under the Kernel -> Change Kernel menu, with the name Python [conda env:DEEPLABCUT]. Similarly, it will be available as an option under the File -> New notebook menu.

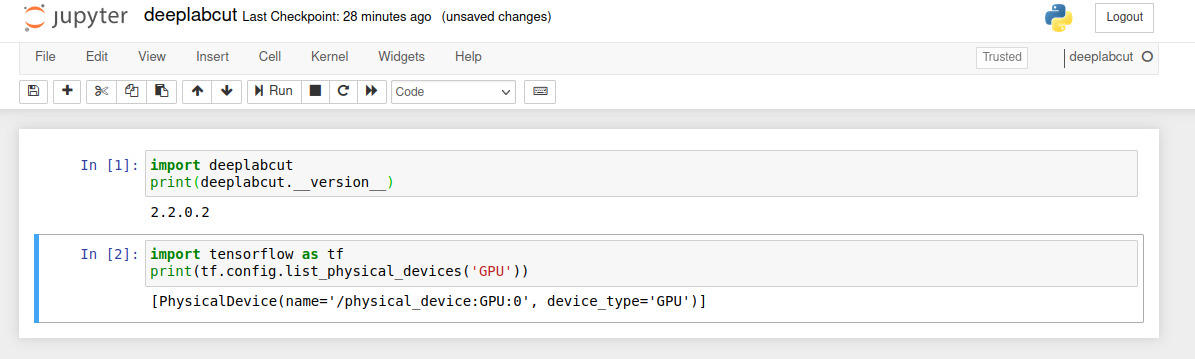

To confirm that the session is set up correctly, a notebook running the DEEPLABCUT kernel can import the deeplabcut package and print its version along with the assigned GPU device.
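A notebook cell along the following lines could perform that check. It is a minimal sketch rather than the original example: the helper name `describe_session` is hypothetical, and it assumes that the assigned GPU is exposed through the `CUDA_VISIBLE_DEVICES` environment variable and that `nvidia-smi` is on the path of the GPU node. Neither assumption is confirmed by this page.

```python
import importlib
import os
import shutil
import subprocess


def describe_session():
    """Collect the DeepLabCut version and the GPU visible to this job."""
    info = {}

    # deeplabcut is only importable when the DEEPLABCUT kernel is selected.
    try:
        dlc = importlib.import_module("deeplabcut")
        info["deeplabcut_version"] = getattr(dlc, "__version__", "unknown")
    except ImportError:
        info["deeplabcut_version"] = None

    # Assumption: the scheduler exports the assigned GPU in this variable.
    info["cuda_visible_devices"] = os.environ.get("CUDA_VISIBLE_DEVICES")

    # "nvidia-smi -L" prints one line per GPU visible on the node.
    if shutil.which("nvidia-smi"):
        result = subprocess.run(
            ["nvidia-smi", "-L"], capture_output=True, text=True, check=False
        )
        info["gpus"] = result.stdout.strip().splitlines()
    else:
        info["gpus"] = []
    return info


for key, value in describe_session().items():
    print(f"{key}: {value}")
```

If `deeplabcut_version` is reported as `None`, the notebook is most likely running a kernel other than Python [conda env:DEEPLABCUT].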

When you have finished your analysis in Jupyter Notebook, please remember to delete your job by clicking the red Delete button in OnDemand, which returns the requested resources to the cluster queues.