Using RELION via OnDemand¶

Avoid leaving sessions idling

Please be respectful of other cluster users - once you have finished an OnDemand session for RELION, please exit it fully, freeing up the resources for other cluster users. Idling sessions showing no compute will be cleared by the ITSR admin team periodically.

RELION (for REgularised LIkelihood OptimisatioN) is a stand-alone computer program for Maximum A Posteriori refinement of (multiple) 3D reconstructions or 2D class averages in cryo-electron microscopy. Using RELION on Apocrita via OnDemand allows you to run its graphical user interface (GUI). Please refer to the overview section for instructions on how to log in to OnDemand.

Starting a RELION session¶

The CPU version of RELION is limited

Some aspects of RELION, such as ModelAngelo building in the recent RELION 5 subtomogram averaging pipeline, will only work on a GPU node.

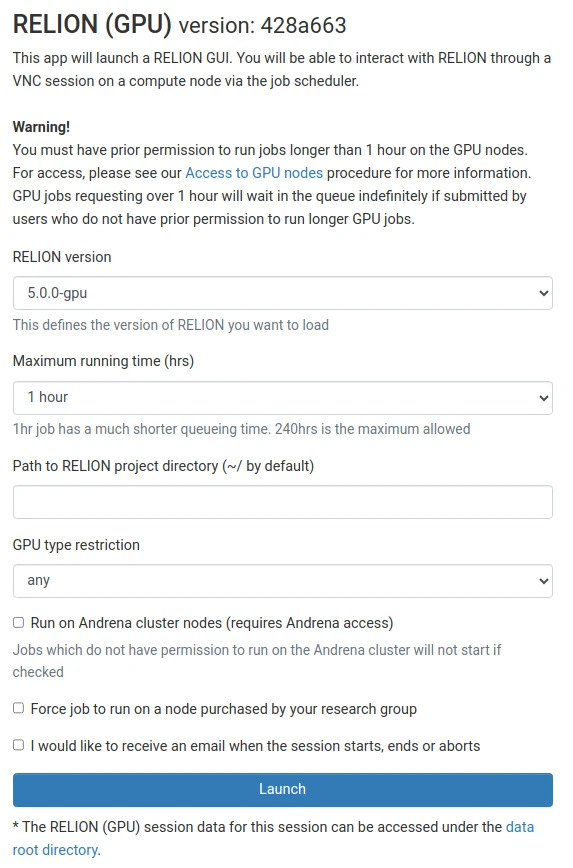

Select RELION from the GUIs list of the Interactive Apps drop-down menu, or from the My Interactive Sessions page to launch the RELION GUI on a CPU or GPU node.

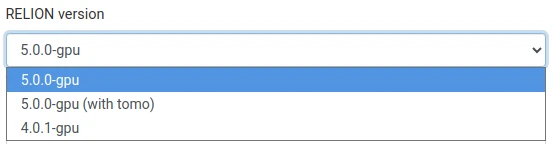

RELION 5 has a regular option (5.0.0-cpu/5.0.0-gpu) and a new subtomogram averaging pipeline option (5.0.0-cpu (with tomo)/5.0.0-gpu (with tomo)). The Graphical User Interface (GUI) for each option is different, so please select carefully.

Choose the resources your job will need. Choosing a 1 hour maximum running time is the best option for getting a session quickly, unless you have access to owned nodes, which may offer sessions immediately for up to 24 hours if resources are available.

Project directory

You need to enter a path to your project directory (where project files are located) or your session will be started in your home directory by default.

After you click Launch, the request will be queued. When resources have been allocated, you can connect to the session by clicking the blue Launch RELION button.

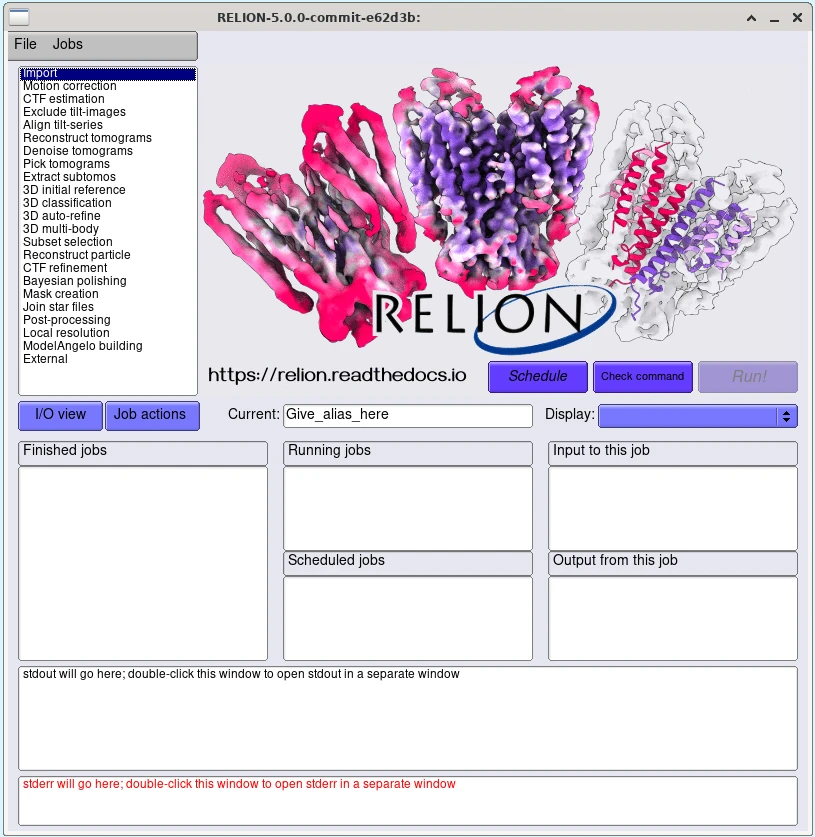

Once connected, the familiar RELION interface is presented, and you will be able to use the allocated resources, and access your research data located on Apocrita.

Selecting the correct CPU and GPU count¶

WARNING

Many of the RELION jobs in the GUI make poor default choices regarding CPU threading, MPI processes and GPU counts. Please ensure you set these to match the resources allocated to your session as detailed below, and monitor both GPU usage and CPU core usage throughout your session.
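As one possible way to monitor GPU usage from a script, the sketch below queries `nvidia-smi` and flags GPUs whose utilisation is below a threshold. It assumes a terminal is available on the same node as your session and that `nvidia-smi` is on the `PATH`; neither is confirmed by this page. The function names are hypothetical, and the default 70 % threshold mirrors the rule of thumb given in the 3D auto-refine section of this page.

```python
import subprocess

# nvidia-smi query returning one CSV line per GPU, without header or units.
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,utilization.gpu,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_stats(csv_text):
    """Parse lines such as '0, 35, 1024, 40960' into a list of dicts.

    Assumes every field is numeric; fields reported as '[N/A]' are not handled.
    """
    stats = []
    for line in csv_text.strip().splitlines():
        index, util, used, total = [field.strip() for field in line.split(",")]
        stats.append(
            {
                "index": int(index),
                "util_percent": int(util),
                "vram_used_mib": int(used),
                "vram_total_mib": int(total),
            }
        )
    return stats


def underused_gpus(stats, threshold=70):
    """Return the indices of GPUs whose utilisation is below the threshold."""
    return [gpu["index"] for gpu in stats if gpu["util_percent"] < threshold]


def read_gpu_stats():
    """Run nvidia-smi once and return the parsed statistics."""
    result = subprocess.run(QUERY, capture_output=True, text=True, check=True)
    return parse_gpu_stats(result.stdout)
```

Calling `underused_gpus(read_gpu_stats())` a few times while a job runs gives a rough picture; a single low reading is not meaningful on its own, since utilisation fluctuates between processing stages.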

RELION MPI logic is very complicated. We suggest all users look over the following references for a more thorough explanation, and for troubleshooting errors such as running out of video RAM (VRAM) or system RAM:

- RELION on Biowulf

- MRC Laboratory of Molecular Biology (LMB) RELION documentation

- GitHub issue from RELION maintainer explaining MPI procs and threads

GPU job types¶

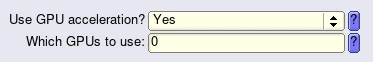

"GPU selection"¶

For GPU jobs, please ensure you identify only the allocated GPU device(s) for your session (as a comma-separated list if your session has multiple GPUs available) in the "Which GPUs to use" box under the "Compute" tab for the job type:

For a single GPU session, this will be 0; see here for a table detailing GPU device numbering logic.

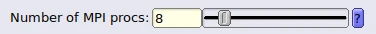

"Number of MPI procs" and "Number of threads"¶

For most GPU accelerated jobs (e.g. 3D classification, 3D multi-body), you should calculate the "Number of MPI procs" under the "Running" tab for the job using the following formula:

- 1 "Leader" MPI process

- 1 "Follower" MPI process per GPU

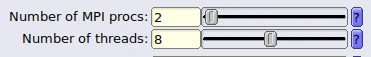

The first "Leader" MPI process does not perform any real calculation or utilise any CPU cores, but instead controls the MPI logic for the "Follower" MPI process(es). In most cases, this means "Number of MPI procs" should be set to 2 (1 "Leader" MPI process plus 1 "Follower" MPI process for a single GPU).

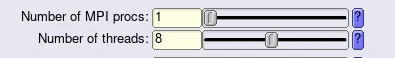

"Number of threads" should be set as a calculation of the number of cores allocated to your session multiplied by the number of GPUs allocated to your job (in most cases, this will be 8 cores * 1 GPU for a total of 8 threads).

Combined, this will look like:

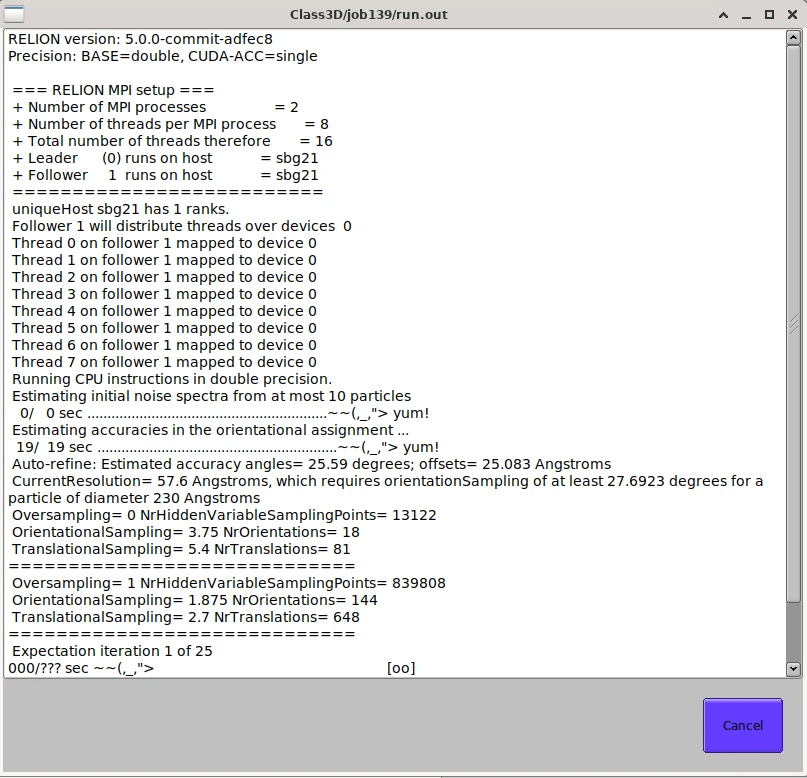
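The arithmetic for these three fields can be sketched in a few lines of Python. This is only an illustration of the rules as worded above, not part of RELION; `gpu_job_settings` is a hypothetical helper, and only the single-GPU case (8 cores, 1 GPU) is confirmed by this page. The GPU device numbers must be supplied by you, since the numbering depends on your session.

```python
def gpu_job_settings(cores, gpu_ids):
    """Suggest values for the "Compute" and "Running" tabs of a GPU job.

    cores   -- CPU cores allocated to the session
    gpu_ids -- device numbers of the GPUs allocated to the session
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    if not gpu_ids:
        raise ValueError("at least one GPU device number is required")

    n_gpus = len(gpu_ids)
    return {
        # Comma-separated list for the "Which GPUs to use" box.
        "which_gpus": ",".join(str(gpu) for gpu in gpu_ids),
        # 1 "Leader" MPI process plus 1 "Follower" MPI process per GPU.
        "mpi_procs": 1 + n_gpus,
        # Cores multiplied by GPUs, following the rule as stated on this page.
        "threads": cores * n_gpus,
    }


print(gpu_job_settings(cores=8, gpu_ids=[0]))
```

For the common case of 8 cores and a single GPU numbered 0, this gives "Which GPUs to use" as 0, 2 MPI procs and 8 threads, matching the values described above.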

During execution of these jobs, the output window should look something like:

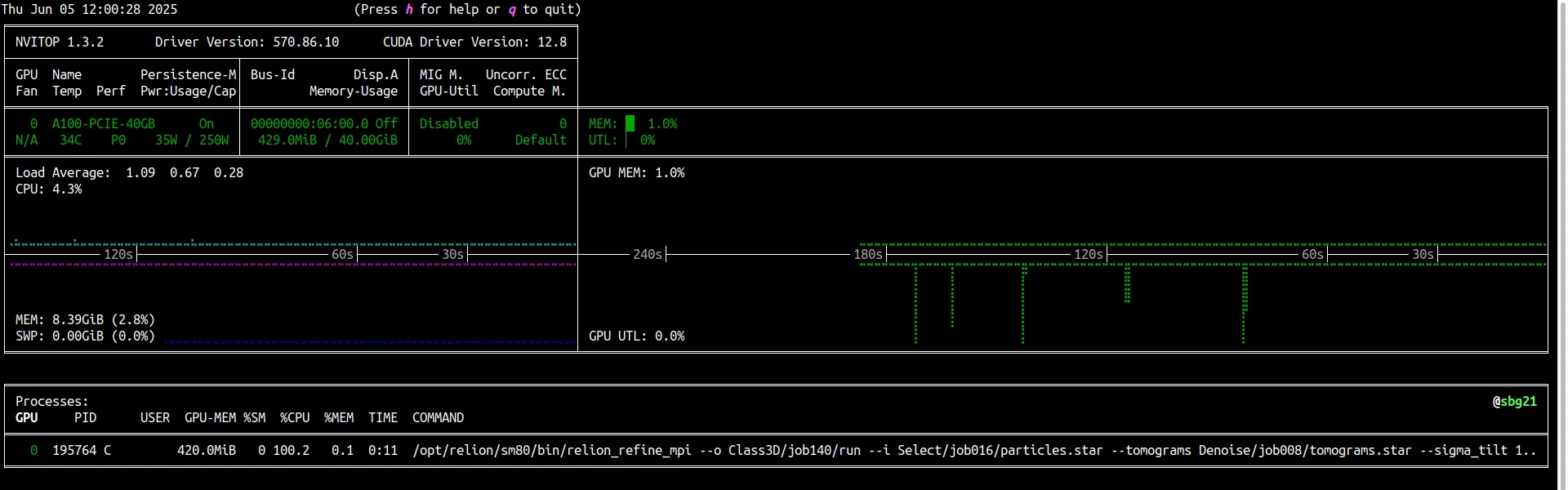

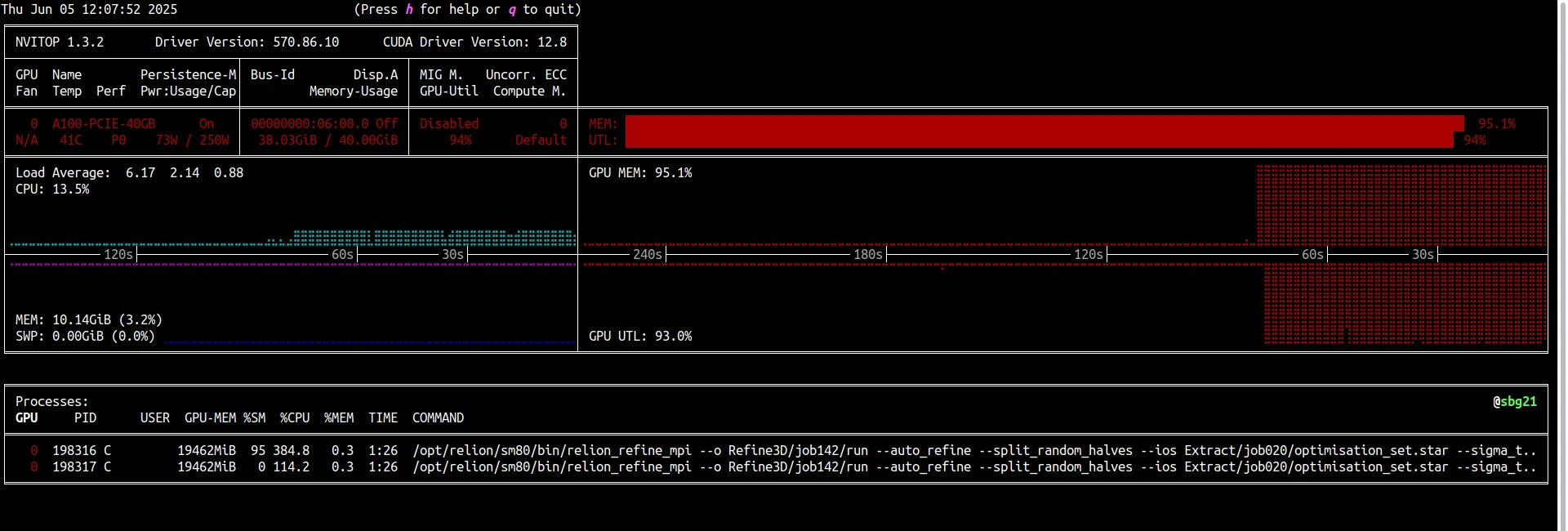

When monitoring your RELION session, you should only see one spawned MPI process per GPU, for example in nvitop.

3D auto-refine¶

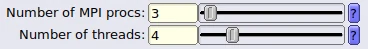

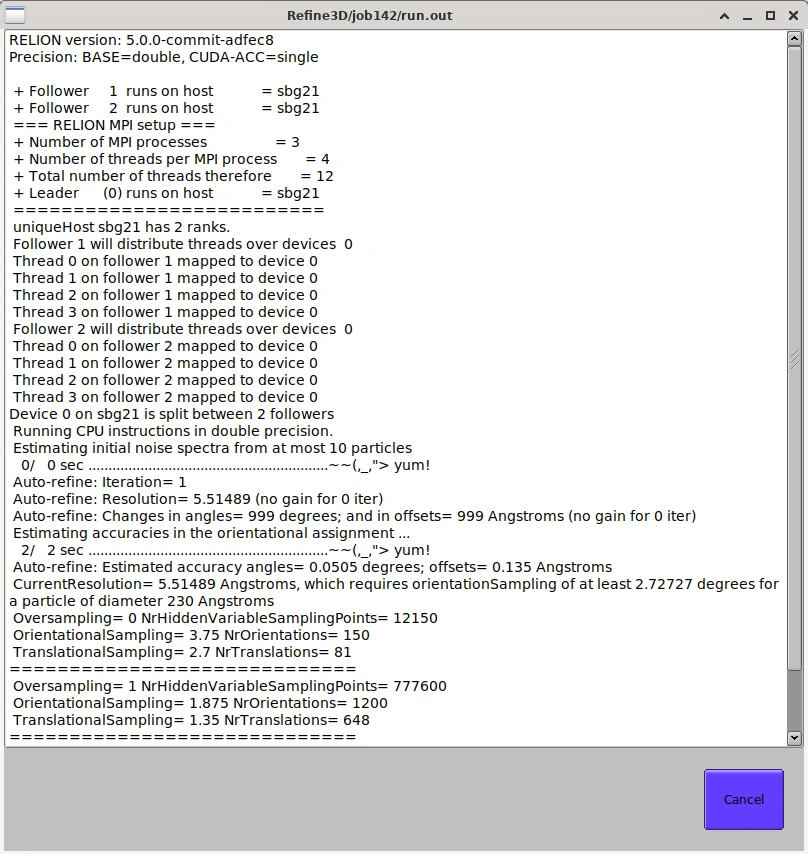

3D auto-refine needs an odd number of MPI processes: one "Leader" MPI process plus an equal number of "Follower" MPI processes for each of the two half-sets. In most cases, this means "Number of MPI procs" should be set to 3 (1 "Leader" MPI process plus 2 "Follower" MPI processes for a single GPU).
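A minimal sketch of this rule in Python is shown here. `auto_refine_settings` is a hypothetical helper, not a RELION function, and dividing the session's cores evenly between the "Follower" processes is an assumption of the sketch, chosen to agree with the 8-core example given in this section.

```python
def auto_refine_settings(cores, followers=2):
    """Suggest "Number of MPI procs" and "Number of threads" for 3D auto-refine.

    cores     -- CPU cores allocated to the session
    followers -- total "Follower" MPI processes; must be even so that the
                 two half-sets receive the same number of processes
    """
    if followers < 2 or followers % 2 != 0:
        raise ValueError("followers must be an even number of at least 2")
    if cores < followers:
        raise ValueError("need at least one core per Follower process")

    return {
        # 1 "Leader" plus an even number of "Followers" is always odd.
        "mpi_procs": followers + 1,
        # Assumption: cores are shared evenly between the Followers.
        "threads": cores // followers,
    }


print(auto_refine_settings(cores=8))
```

With 8 cores and the default of 2 followers, the helper suggests 3 MPI procs and 4 threads. Passing an odd follower count raises an error, because the two half-sets could not be balanced.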

Beware of VRAM utilisation

You can put more than one MPI process on a GPU if the GPU memory is sufficiently large. If GPU utilisation (which you can check using various tools) remains constantly low (< 70 %), you might want to try running 2 MPI processes per GPU. However, GPUs with a small amount of VRAM may fail to run jobs configured in this fashion.

For example, if you are allocated 8 CPU cores for your session, you can select 3 MPI processes (1 "Leader", 2 "Followers") and 4 threads, which gives 4 threads per "Follower" MPI process:

During execution of this job, the output window should look something like:

And in nvitop, two MPI processes will be spawned on the GPU:

CPU job types¶

Monitor CPU core usage and threading

Please ensure you set your MPI processes and threads to match the CPU cores allocated to your session as detailed below. And please monitor CPU core usage throughout your session.

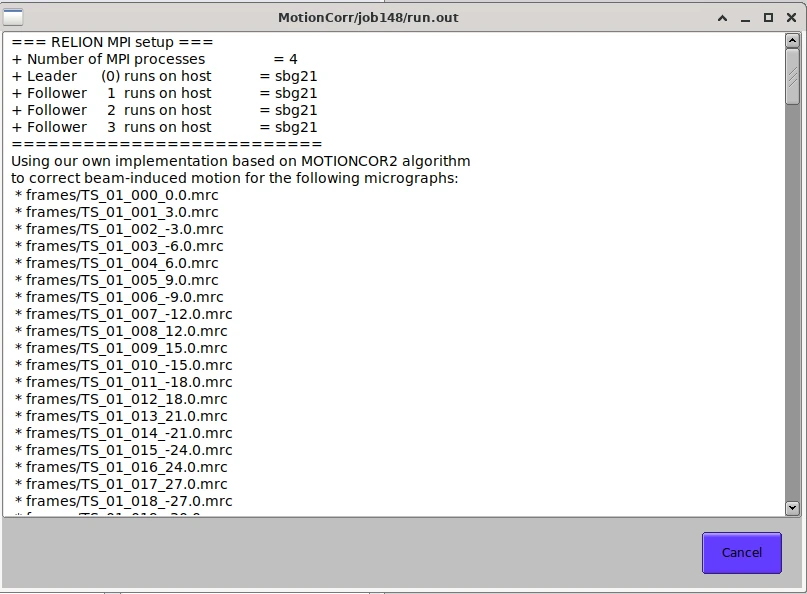

For most CPU-only jobs (e.g. Motion correction, CTF Refinement, Bayesian polishing), the first MPI "Leader" process will utilise CPU cores, unlike the GPU job types above.

For CPU-only jobs, you should choose a "Number of MPI procs" and a "Number of threads" whose product equals your available CPU cores. For example, if you have 8 CPU cores available, you could set "Number of MPI procs" to 4 and "Number of threads" to 2, giving 4 × 2 = 8 to match your available cores:

During a job run, this will look something like:

In some cases, you might run out of system memory. In this case, reduce the number of MPI processes by assigning more threads per process (e.g. 2 MPI processes and 4 threads per process). The memory usage is roughly proportional to the number of MPI processes, not the total number of threads:

Some jobs (e.g. CTFFind) do not use threading, so there is no option for threads. Use one MPI process per CPU core instead; for 8 cores, set "Number of MPI procs" to 8:

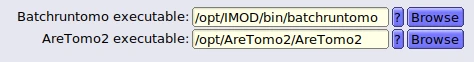
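The CPU-only rules in this section can be sanity-checked with a short Python sketch. `cpu_job_options` is a hypothetical helper that lists every pair of "Number of MPI procs" and "Number of threads" whose product equals the allocated cores; it is an illustration of the arithmetic only and does not query your session.

```python
def cpu_job_options(cores):
    """List (mpi_procs, threads) pairs whose product equals the core count.

    Pairs are ordered by increasing MPI process count. Because memory usage
    is roughly proportional to the number of MPI processes, pairs earlier in
    the list are the ones to try if a job runs out of system memory.
    """
    if cores < 1:
        raise ValueError("cores must be at least 1")
    return [
        (mpi_procs, cores // mpi_procs)
        for mpi_procs in range(1, cores + 1)
        if cores % mpi_procs == 0
    ]


options = cpu_job_options(8)
print(options)

# Jobs without a threads option use the last pair: one MPI process per core.
mpi_only = options[-1][0]
print(mpi_only)
```

For 8 cores the options are 1 × 8, 2 × 4, 4 × 2 and 8 × 1. Moving from 4 × 2 to 2 × 4 keeps all 8 cores busy while halving the number of MPI processes, which is the adjustment suggested above when memory runs short.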

IMOD and AreTomo2¶

Some workflows such as AlignTiltSeries rely on the additional applications IMOD and AreTomo2. These are installed inside the container RELION runs from and can be found in the following paths:

- IMOD - /opt/IMOD

- AreTomo2 - /opt/AreTomo2/AreTomo2

Ensure you enter these paths in full anywhere RELION requests them, e.g.:

Exiting the session¶

If a session exceeds the requested running time, it will be killed. You can finish your session using one of the following methods:

- clicking [x] in the upper right corner of the RELION application

- selecting File -> Quit from the application menus

- clicking the red Delete button for the relevant session on the My Interactive Sessions page.

After a session ends, the resources will be returned to the cluster queues.